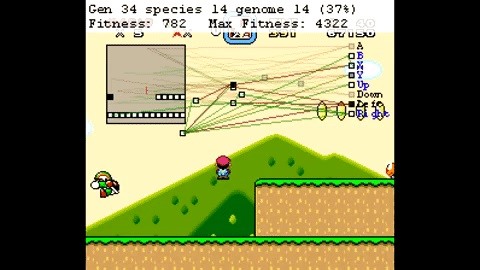

As we have seen previously with SethBling's MarI/O videos and other examples, video games seem to be a great training ground for AI neural networks. Augmented reality and machine learning are both part of a collection of technologies that seem to be approaching maturity, which will likely keep them intertwined for the foreseeable future.

For developers, machine learning will definitely change the way we create software in the near future. Instead of going line by line through code to create our next killer app, we will likely set the parameters and determine the training regimen for a basic AI. And really, that future is likely not as far away as it may seem.

In a blog post this week, Unity announced the release of what it is calling Machine Learning Agents, or ML-Agents for short. This Python-based API incorporates TensorFlow, the popular open-source machine learning library. The beta SDK, which is also open source, is being released with a few sample projects and some basic algorithms to get interested developers started.

Because it works by offering rewards for wanted behaviors and removing rewards for unwanted ones, training these AI agents is very much on par with training an animal or a retail sales team, though likely much faster due to iteration speed.
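To make the reward idea concrete, here is a minimal sketch of reward-driven training in plain Python. It does not use the ML-Agents SDK or TensorFlow; it is a tabular Q-learning toy, and the corridor environment, the reward values, and every function name in it are assumptions made up for illustration.

```python
import random

N_STATES = 5          # hypothetical corridor with cells 0..4; the goal is cell 4
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Move within the corridor and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True      # reward for the wanted behavior
    return next_state, -0.01, False       # small penalty for a wasted step


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a table of action values by trial and error (Q-learning)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Mostly exploit what has been learned, occasionally explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward the reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Return the preferred action (-1 or +1) for each cell."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The agent starts with no preference at all, and the rewards alone shape its behavior over repeated episodes. Real projects would swap the table for a neural network, but the feedback loop is the same in spirit.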

The ML-Agents SDK is built on three primary objects:

Agents are the action element in the grouping. A single algorithm can be made up of a number of agents with potentially different sets of rewards for various behaviors.

Brains conduct the decision-making process that sends agents into action. Brains can be put into one of four modes: external, internal, player, and heuristic.

Academy is the object that holds the various brains of a specific project.
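The relationship between the three objects can be sketched in a few lines of Python. This is only an illustration of the structure described above, not the SDK's actual API: the class definitions, field names, and the `decide` callback are all hypothetical, and only the four mode names come from the announcement.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class BrainMode(Enum):
    """The four brain modes named in the announcement."""
    EXTERNAL = "external"
    INTERNAL = "internal"
    PLAYER = "player"
    HEURISTIC = "heuristic"


@dataclass
class Brain:
    """Decides which action an agent should take (hypothetical shape)."""
    name: str
    mode: BrainMode
    decide: Callable[[List[float]], int]


@dataclass
class Agent:
    """Acts in the scene; delegates every decision to its linked brain."""
    name: str
    brain: Brain
    total_reward: float = 0.0

    def act(self, observation: List[float]) -> int:
        return self.brain.decide(observation)


@dataclass
class Academy:
    """Holds the brains that belong to one project."""
    brains: Dict[str, Brain] = field(default_factory=dict)

    def add_brain(self, brain: Brain) -> Brain:
        self.brains[brain.name] = brain
        return brain


if __name__ == "__main__":
    academy = Academy()
    # A hand-written rule standing in for a trained model:
    # pick the index of the largest observation value.
    brain = academy.add_brain(
        Brain("walker", BrainMode.HEURISTIC, lambda obs: obs.index(max(obs)))
    )
    first, second = Agent("first", brain), Agent("second", brain)
    print(first.act([0.1, 0.9]), second.act([0.7, 0.2]))
```

Here two agents share one brain, while a single agent linked to a single brain would simply be the one-agent version of the same wiring.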

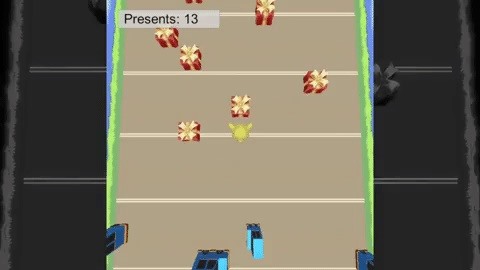

In the animation below, you see a fresh agent training on what looks like a simple Frogger-type game. As you can see, its early efforts are rough.

But after 6 hours of training, it has learned how to maneuver and can go long distances, collecting many presents, without issue.

The example above is based on the single-agent scenario, in which a single agent is linked to a single brain. This is one of many potential scenarios; others include cooperative multi-agent, competitive multi-agent, ecosystem, and, in the video below, simultaneous single-agent.

Computer vision, a popular use of machine learning in the AR space that trains AI agents to see and interpret images and video, is one of many forms of machine learning that will likely benefit from this type of project.

I will be interested to see where we can incorporate more of this into AR, and I am looking forward to seeing what the community comes up with, as well as what comes out of Unite Austin in a couple of weeks.
