Facebook, which joined Google and Huawei in underwriting the UW Reality Lab at the University of Washington in January, already appears to be seeing a return on its donation.

According to a paper published earlier this month on Cornell University's arXiv service, titled "Photo Wake-Up: 3D Character Animation from a Single Photo," a team of Facebook and University of Washington researchers has developed a method for generating animated 3D models in augmented reality from a single photograph.

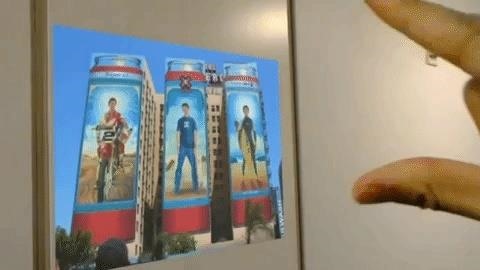

The method works by fitting a morphable body model to the figure in an image and estimating a body map on that model. Using the mapped model, the system constructs a 3D mesh, applies textures to the mesh that match the body map, and attaches a skeletal rig that controls the figure's motion. The result makes it appear as though a 3D figure has stepped out of the photo. The research team says the system works with photographs, cartoon figures, and even abstract paintings.
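The article only names the stages of the pipeline. To make the final stage more concrete, here is a minimal sketch of linear blend skinning, the common textbook way a skeletal rig deforms a mesh: each vertex follows a weighted blend of its bones' rigid transforms. This is a generic illustration, not the researchers' code, and the paper may deform its meshes differently. The function names, the rotation-only joints, and the two-bone "arm" in the demo are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
# Hypothetical representation: a bone transform maps a rest-pose point p
# to its posed position p' = R @ p + t, given as (R, t).
BoneTransform = Tuple[Mat3, Vec3]

IDENTITY: BoneTransform = (
    ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)),
    (0.0, 0.0, 0.0),
)


def _mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return (
        m[0][0] * v[0] + m[0][1] * v[1] + m[0][2] * v[2],
        m[1][0] * v[0] + m[1][1] * v[1] + m[1][2] * v[2],
        m[2][0] * v[0] + m[2][1] * v[1] + m[2][2] * v[2],
    )


def rotate_about_z(pivot: Vec3, angle: float) -> BoneTransform:
    """Rigid rotation by `angle` radians about the z axis through a joint at `pivot`."""
    c, s = math.cos(angle), math.sin(angle)
    rot: Mat3 = ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))
    # p' = R (p - pivot) + pivot, so the translation is t = pivot - R @ pivot.
    r_pivot = _mat_vec(rot, pivot)
    trans: Vec3 = (pivot[0] - r_pivot[0], pivot[1] - r_pivot[1], pivot[2] - r_pivot[2])
    return rot, trans


def linear_blend_skinning(
    vertices: Sequence[Vec3],
    weights: Sequence[Sequence[float]],
    bones: Sequence[BoneTransform],
) -> List[Vec3]:
    """Pose each vertex as the weighted average of where each bone would move it."""
    if len(vertices) != len(weights):
        raise ValueError("need one weight list per vertex")
    posed: List[Vec3] = []
    for v, w in zip(vertices, weights):
        if len(w) != len(bones):
            raise ValueError("each vertex needs one weight per bone")
        if not math.isclose(sum(w), 1.0, abs_tol=1e-9):
            raise ValueError("skinning weights must sum to 1")
        acc = [0.0, 0.0, 0.0]
        for wb, (rot, trans) in zip(w, bones):
            moved = _mat_vec(rot, v)
            for i in range(3):
                acc[i] += wb * (moved[i] + trans[i])
        posed.append((acc[0], acc[1], acc[2]))
    return posed


if __name__ == "__main__":
    # Hypothetical two-bone "arm" along the x axis that bends 90 degrees at an
    # elbow joint at x = 1. The middle vertex is shared equally by both bones.
    vertices = [(0.5, 0.0, 0.0), (1.5, 0.0, 0.0), (2.0, 0.0, 0.0)]
    weights = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
    bones = [IDENTITY, rotate_about_z((1.0, 0.0, 0.0), math.pi / 2)]
    for p in linear_blend_skinning(vertices, weights, bones):
        print(tuple(round(c, 3) for c in p))
```

Linear blend skinning is popular because it is cheap and easy to drive from a skeleton, but averaging rigid transforms is also known to make meshes pinch or lose volume around sharply bent joints.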

The demo video for the system shows a HoloLens user watching a figure from a Picasso painting escape from the canvas, an extreme athlete bursting forth from an advertisement, and Paul McCartney strutting off the cover of the Beatles' album Help!

Facebook research scientist Ira Kemelmacher-Shlizerman, who is also an assistant professor in the Paul G. Allen School of Computer Science and Engineering at the University of Washington and the founder and co-director of the UW Reality Lab, co-authored the paper. Her co-authors are Brian Curless, a professor at the Allen School and director of the UW Reality Lab, and Chung-Yi, a doctoral student at the university who also worked at Facebook as a research intern in summer 2018.

Kemelmacher-Shlizerman joined Facebook when the company acquired her startup, Dreambit, while her earlier Moving Portraits research now resides at Google. Curless's research on 3D surface reconstruction contributed to the environmental mapping algorithms in Microsoft HoloLens and Google's Project Tango. The two UW Reality Lab faculty members previously collaborated on a similar project that reconstructed soccer videos as augmented reality replays on tabletops.

As the paper's limitations section acknowledges, the results are still a little rough around the edges in practice (which actually suits abstract art). Even so, the computer vision approach aligns with Facebook's research into full-body AR masks and with the image recognition and other capabilities of its Spark AR platform.

For now, however, the research team has demonstrated the AR experience only on HoloLens, although the paper's description of the method does not state that the headset's depth sensors are required.

So while the technology may not find its way onto the Spark AR platform or into Google's mobile AR efforts right away, the companies backing the UW Reality Lab stand to benefit eventually from the research their donations made possible.
