Like clockwork, Apple has unveiled the latest additions to its ARKit toolkit at the annual Worldwide Developers Conference, where ARKit first said hello to the world in 2017. Apple also introduced new tools that take a direct shot at Unity, Unreal Engine, and others.

Coming to iOS 13 this fall, ARKit 3 will support people occlusion and motion capture. The former enables the camera to recognize people who are present in a scene and place virtual content behind and in front of them, while the latter gives apps the ability to track moving bodies.

In addition, ARKit 3 lets apps track up to three faces via the front camera and supports simultaneous capture from the front and back cameras. Apple is also introducing collaborative sessions, which make the process of initiating a shared AR experience faster.

To demonstrate ARKit 3, Apple brought out Mojang, the Microsoft-owned publisher of Minecraft, to give the first live demonstration of Minecraft Earth.

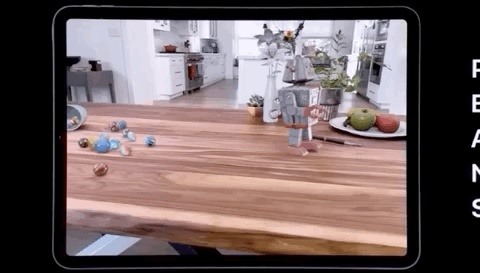

But that's not all. Before showing off the new features of ARKit, Apple introduced two new development tools: RealityKit and Reality Composer. Using the RealityKit Swift API, developers can take advantage of high-quality 3D rendering, environment mapping, camera noise and motion blur, animation tools, physics simulation, and spatial audio to make their AR experiences more realistic.

Available for iOS, the new iPadOS, and Mac, Reality Composer gives developers who lack 3D experience the means to create AR experiences. With a drag-and-drop interface and a library of objects, developers can build an AR experience visually, then use it in an app via Xcode or on a webpage through AR Quick Look.

"The new app development technologies unveiled today make app development faster, easier and more fun for developers, and represent the future of app creation across all Apple platforms," said Craig Federighi, Apple's senior vice president of software engineering, in a statement.

The original version of ARKit in iOS 11 was groundbreaking, bringing surface detection, markerless tracking, and ambient lighting to mobile augmented reality experiences. Developers were quick to show off what they could do with ARKit in beta, sharing demos of AR portals, virtual pets, upgraded location-based gaming, Alexa integration, and more ahead of the public release in Sept. 2017.

And while ARKit 2.0 in iOS 12 brought powerful new features like multiplayer experiences, persistent content, object recognition, and support for web-based AR experiences, developers have not been as aggressive in adopting the new capabilities. Not even the multiplayer arcade app from Directive Games that Apple demonstrated on stage during its annual iPhone launch event has hit the App Store yet.

With ARKit 3, Apple adds a new set of features that give mobile AR experiences another boost in immersion. In fact, Apple can now match some of the features that AR cloud platform makers like Niantic and 6D.ai have been testing over the past year.

But what good are these superpowers without adoption from developers and app publishers? RealityKit and Reality Composer appear to be Apple's answer to that question. By shortening the learning curve, Apple will enable a larger population of developers to begin creating their own AR experiences.

Moreover, Apple's new tools pose a competitive threat to established players in AR development. RealityKit moves into territory previously reserved for Unity and Unreal Engine, while Reality Composer puts tools from upstarts like Torch and WiARframe on shaky ground.
