Although early attempts at consumer smartglasses have relied on trackpads and handheld or wearable controllers for user input, it's the gesture control interfaces of the HoloLens 2 and the Magic Leap One that represent the future of smartglasses input.

A new machine learning model developed by Google's research arm may make it possible to implement the complex hand gesture controls commonly found in high-end AR systems in lightweight smartglasses without the additional bulk and cost of dedicated depth and motion sensors.

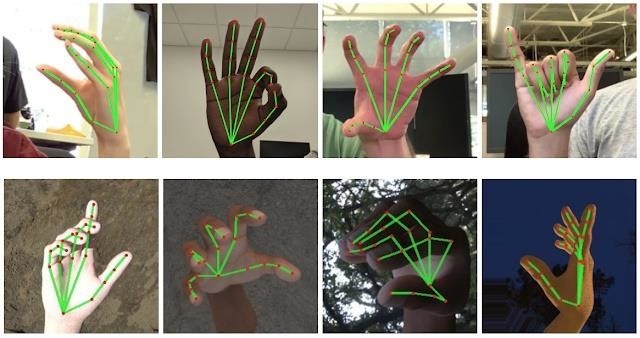

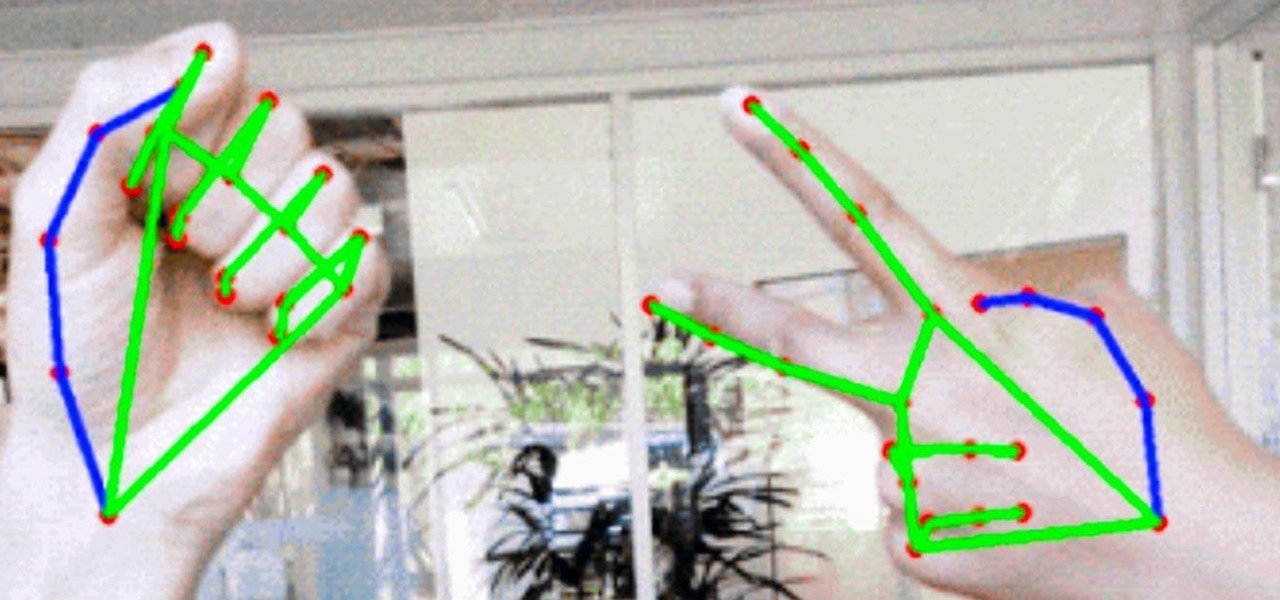

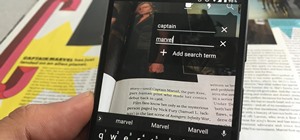

This week, the Google AI team unveiled its latest approach to hand and finger tracking. It uses the open-source, cross-platform MediaPipe framework to process video in real time on the mobile device itself (rather than in the cloud), with machine learning models mapping 21 points on the hand and fingers.

"We hope that providing this hand perception functionality to the wider research and development community will result in an emergence of creative use cases, stimulating new applications and new research avenues," the team wrote in a blog post detailing its approach.

Google's method of hand and finger tracking actually divides the task among three machine learning models. Rather than training a model to detect the whole hand, which can appear in a broad spectrum of sizes and poses, Google's researchers built a palm detector instead, since palms are more compact and rigid than articulated fingers. With this approach, the team achieved an average precision of nearly 96% in palm detection.
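To make the hand-off between the stages concrete, here is a minimal Python sketch of a detect-then-crop pipeline, assuming each model is supplied as a plain callable. The names `Box`, `palm_to_hand_box`, and `recognize_gestures`, and the `scale` value of 2.5, are hypothetical and chosen for illustration. This is not MediaPipe's API. The real pipeline also accounts for hand rotation, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y) image coordinates


@dataclass(frozen=True)
class Box:
    cx: float  # center x
    cy: float  # center y
    w: float   # width
    h: float   # height


def palm_to_hand_box(palm: Box, scale: float = 2.5) -> Box:
    """Grow a palm box into a square region meant to enclose the whole hand.

    The palm is compact and roughly square, so a detector can find it reliably;
    the crop is then enlarged to cover the fingers. 'scale' is a hypothetical value.
    """
    side = max(palm.w, palm.h) * scale
    return Box(palm.cx, palm.cy, side, side)


# Hypothetical model signatures: any callables with these shapes will do.
PalmDetector = Callable[[object], List[Box]]
LandmarkModel = Callable[[object, Box], Sequence[Point]]
GestureClassifier = Callable[[Sequence[Point]], str]


def recognize_gestures(
    frame: object,
    detect_palms: PalmDetector,
    predict_landmarks: LandmarkModel,
    classify: GestureClassifier,
) -> List[str]:
    """Run palm detection, landmark regression, and gesture matching per hand."""
    results: List[str] = []
    for palm in detect_palms(frame):                    # stage 1: palm detector
        hand_box = palm_to_hand_box(palm)               # crop handed to stage 2
        landmarks = predict_landmarks(frame, hand_box)  # stage 2: 21 keypoints
        if len(landmarks) == 21:                        # skip malformed output
            results.append(classify(landmarks))         # stage 3: gesture label
    return results
```

Because the landmark model only ever sees a tightly cropped hand region, it can spend its capacity on finger positions rather than on finding the hand in the full frame.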

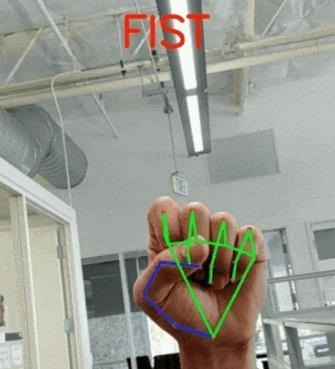

With the palm detected, a second machine learning model locates 21 coordinate points on the knuckles and fingertips of each hand within the camera view. The third stage infers the gesture by determining the pose of each finger and matching that combination against a set of predefined hand gestures. Counting gestures and a variety of hand signs are supported.
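The gesture-matching step can be illustrated with a small Python sketch that classifies each finger as extended or bent from the 21 points and then looks up the resulting pattern in a table. It assumes MediaPipe's landmark ordering (0 is the wrist, followed by four points per finger from base to tip, thumb first). The distance heuristics and the gesture names in `GESTURES` are hypothetical simplifications, not Google's exact rules.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in normalized image coordinates

# Assumed MediaPipe-style indices: 0 = wrist; thumb 1-4, index 5-8,
# middle 9-12, ring 13-16, pinky 17-20 (each listed base to tip).
WRIST, PINKY_MCP = 0, 17
THUMB_IP, THUMB_TIP = 3, 4
FINGER_PIP_TIP = ((6, 8), (10, 12), (14, 16), (18, 20))  # index..pinky

# Hypothetical gesture table keyed by (thumb, index, middle, ring, pinky) states.
GESTURES: Dict[Tuple[bool, ...], str] = {
    (False, False, False, False, False): "FIST",
    (False, True, False, False, False): "ONE",
    (False, True, True, False, False): "TWO",
    (False, True, True, True, False): "THREE",
    (False, True, True, True, True): "FOUR",
    (True, True, True, True, True): "FIVE",
    (False, True, False, False, True): "ROCK",
}


def _dist(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def finger_states(lm: Sequence[Point]) -> Tuple[bool, ...]:
    """Return True for each finger (thumb to pinky) judged to be extended."""
    if len(lm) != 21:
        raise ValueError("expected 21 landmarks")
    # Thumb heuristic: a thumb folded across the palm brings its tip closer to
    # the pinky base than its IP joint; an extended thumb points away from it.
    thumb_out = _dist(lm[THUMB_TIP], lm[PINKY_MCP]) > _dist(lm[THUMB_IP], lm[PINKY_MCP])
    states: List[bool] = [thumb_out]
    for pip, tip in FINGER_PIP_TIP:
        # A curled finger folds its tip back toward the palm, so the tip ends
        # up closer to the wrist than the finger's middle (PIP) joint.
        states.append(_dist(lm[tip], lm[WRIST]) > _dist(lm[pip], lm[WRIST]))
    return tuple(states)


def classify_gesture(lm: Sequence[Point]) -> str:
    """Map the per-finger states to a predefined gesture name."""
    return GESTURES.get(finger_states(lm), "UNKNOWN")
```

Keeping the gesture vocabulary in a lookup table means new signs can be added without retraining any model, which fits the article's description of matching finger poses against predefined gestures.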

Because it relies only on camera images, this machine learning approach can run on Android or iOS devices without dedicated motion or depth sensors. Moreover, the team is releasing the models as open source so that other developers and researchers can deploy them. The team also plans to improve the accuracy and performance of the models over time.

In the immediate future, the hand tracking system could assist developers in building AR experiences similar to those on Snapchat and Facebook that incorporate hand recognition and tracking into selfie camera effects.

Google might also pair the technology with the Soli radar sensor on the Pixel 4 to build unique AR experiences akin to Animoji on the iPhone X series, which rely on a combination of Apple's ARKit and its TrueDepth camera.

However, the bigger implication of this development is what a machine learning approach can bring to smartglasses. By ditching the array of motion and depth sensors, hardware makers could approximate the gesture input of the HoloLens 2 and Magic Leap One in lighter, cheaper devices.

More and more, tech companies are leaning on AI to balance form factor and functionality in AR wearables. Even Microsoft is borrowing the AI-driven surface detection approach of ARKit and ARCore for the new scene understanding capabilities of the HoloLens 2. This software-first approach could be the key to smartglasses slim enough to wear every day, not just in the comfort of the user's home or office.
