In this chapter, we want to start seeing some real progress in our dynamic user interface. To do that, we will make the toolset we crafted in the previous chapter appear wherever we are looking whenever we gaze at an object. To accomplish this, we will be using a very useful part of the C# language: delegates and events.

First, before we get into the core of the lesson, we need to create a class for our TransformUITool. This code will be responsible for handling the position, rotation, and scaling of the object as it does its job. It will also react to the events we will be setting up later in this chapter.

Add a New Class to Tools

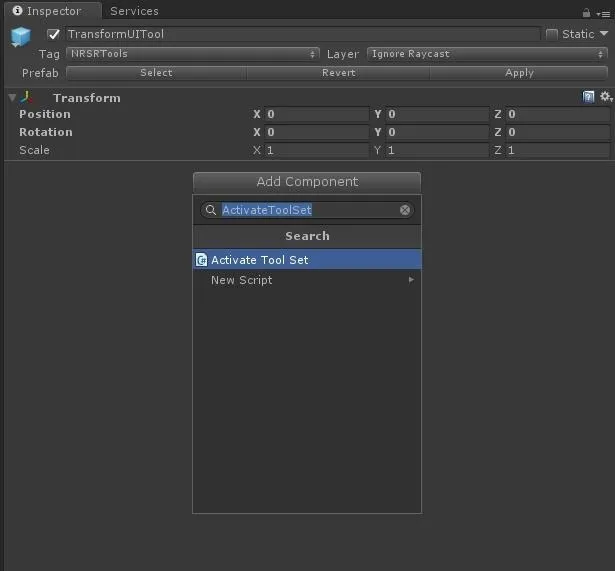

With the TransformUITool object selected, click on the "Add Component" button. Type ActivateToolSet and click on "New Script." Follow that up with clicking on the "Create And Add" button that appears.

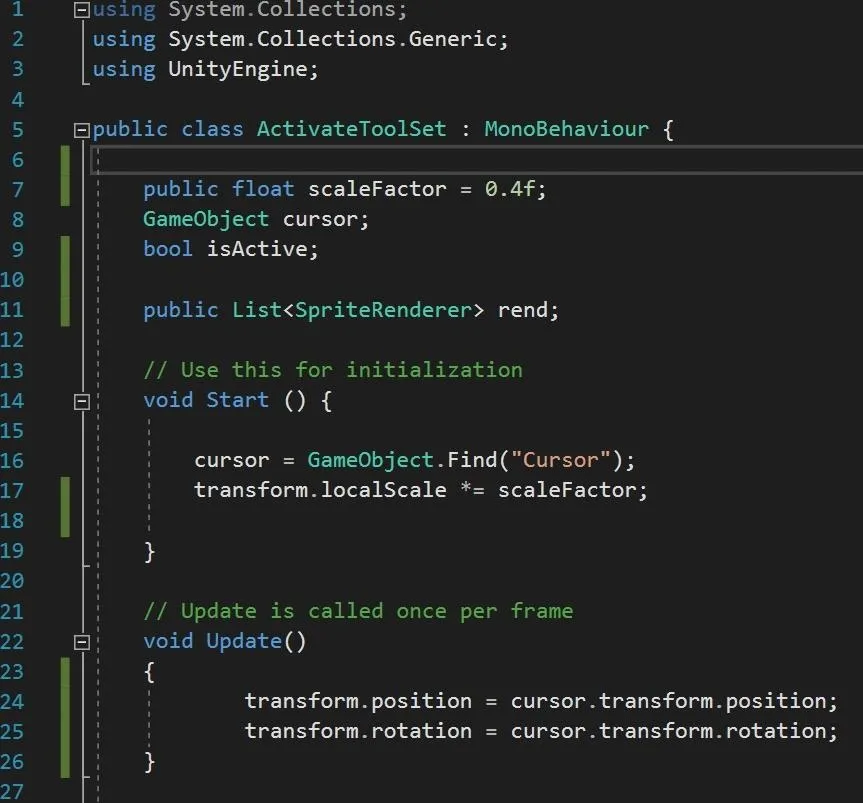

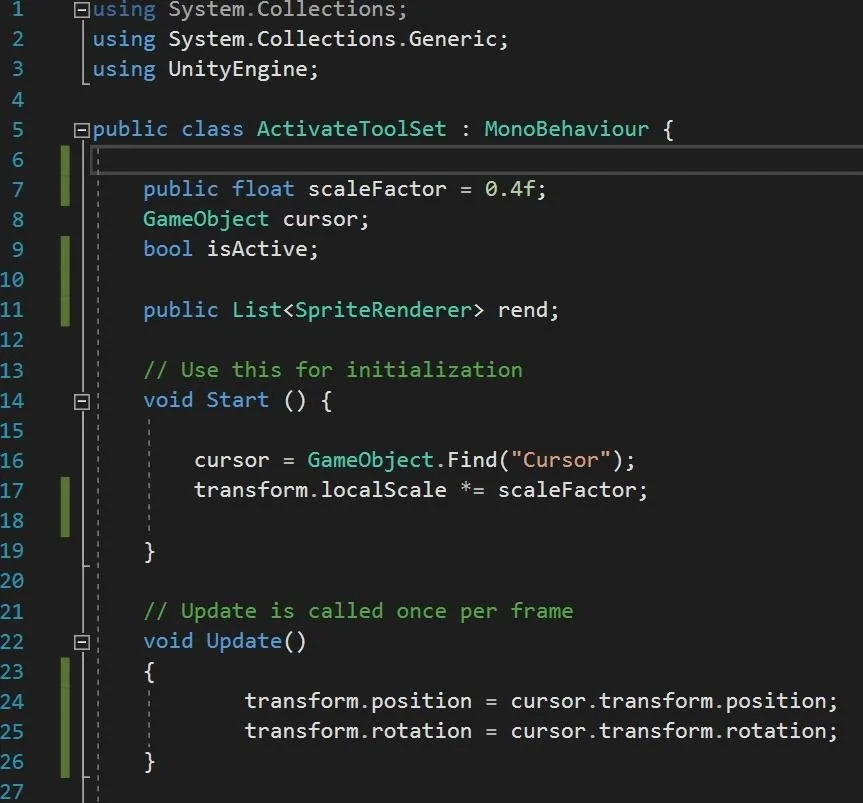

For now, we are just going to do the initial setup for ActivateToolSet. First, we need to declare our variables, or fields and properties.

- We need a public float, which we will call scaleFactor. We are setting the default to 0.4f, but we can of course change it to whatever we like from inside the editor.

- We need a GameObject to get a reference to our cursor object to use as guidance for our toolset.

- bool isActive - we will need this bool to track the object's current active status in the future.

- Finally, we have a List<> of SpriteRenderers, and we are going to fill these in ourselves in the editor, so it needs to be public.

That is all we need in this class for now. We will be back to this code in just a bit. Save it and go back to the Unity editor.
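For readers who cannot see the screenshots, the declarations described above might look roughly like the sketch below. The names scaleFactor and isActive come from the text, and rend is inferred from the "Rend" label the editor shows later; the field name cursor and its access level are assumptions, since the text does not spell them out.

```csharp
using System.Collections.Generic;
using UnityEngine;

public class ActivateToolSet : MonoBehaviour
{
    // Scale applied to the toolset; public so it can be tuned in the editor.
    public float scaleFactor = 0.4f;

    // Reference to the cursor object, used as guidance for the toolset.
    // The name "cursor" is an assumption, not taken from the original code.
    GameObject cursor;

    // Tracks whether the toolset is currently active (used in a later lesson).
    bool isActive;

    // Filled in by hand in the editor, so it has to be public.
    public List<SpriteRenderer> rend = new List<SpriteRenderer>();
}
```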

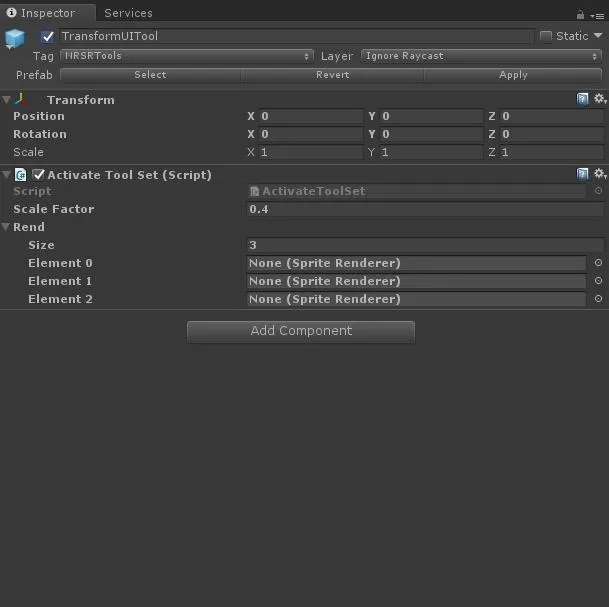

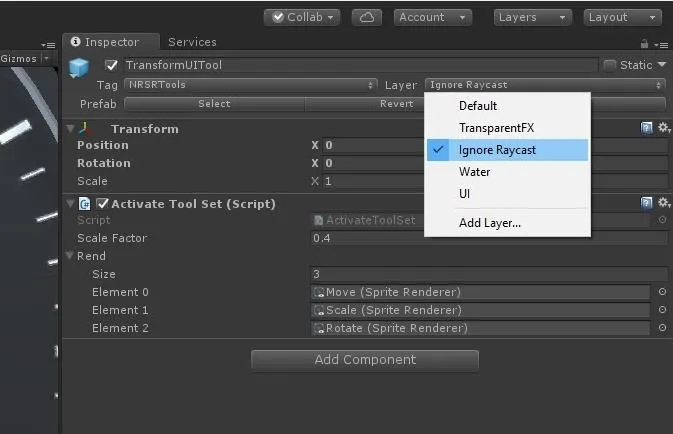

You should now have something that looks similar to this, or at least you will when you type 3 into the size box and tap the "Tab" key.

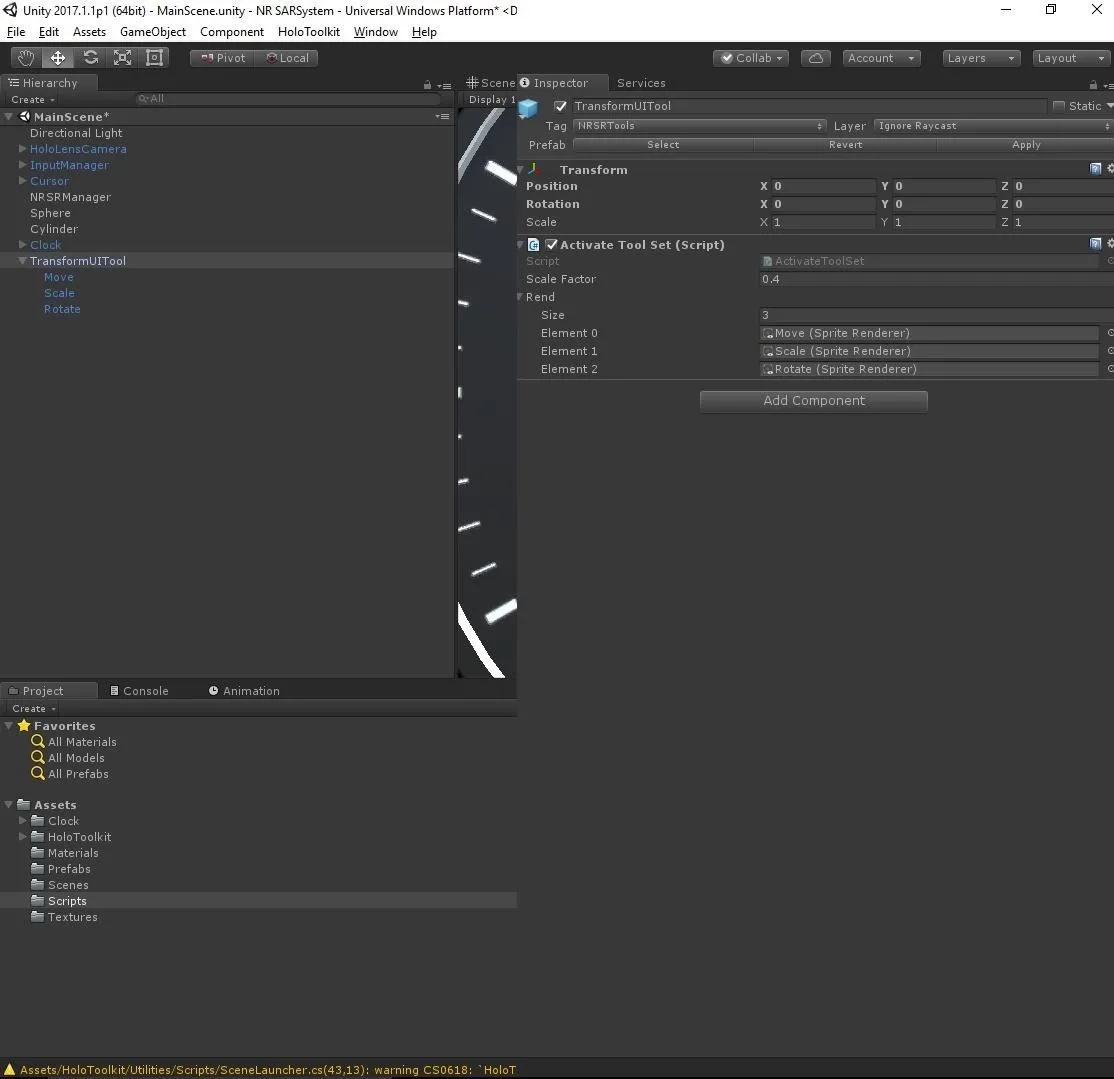

Now take the children of TransformUITool — Move, Scale and Rotate — and drag each one to an open slot in the Rend section of the ActivateToolSet component.

With the TransformUITool still selected, click on the layer drop-down menu and select "Ignore Raycast."

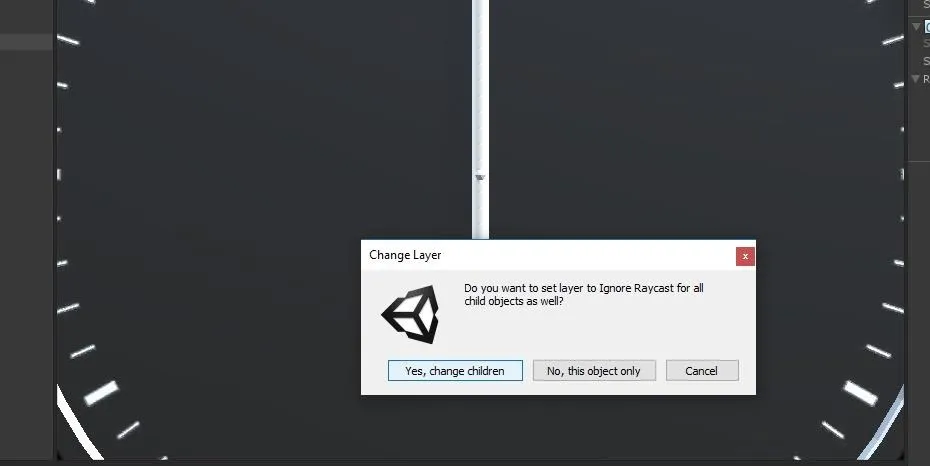

When the box pops up asking about child objects, click "Yes, change children."

Define Delegates & Events

Let's think about the word delegate and how it applies to the real world. After an election year we probably all still remember hearing that word way too many times. A delegate is a person sent to represent others, someone who acts in place of someone else.

In C# a delegate is a reference type, but, instead of referencing something like a GameObject or Renderer, it allows you to reference a method. So, it is a reference to action, rather than strictly referencing data. It makes sense in terms of the original definition.

Here is the syntax for a delegate:

delegate returntype delegateName (parameters);

And here is the one we will be using in our NRSRManager shortly:

public delegate void OnObjectFocused();

You may notice after the word delegate, it looks like a standard method. You could even have a return value and parameters to pass if needed. This is our delegate template. Any method that we want to associate with this delegate must have the exact same signature.
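As a minimal sketch of that idea in plain C#, outside of Unity: a method whose signature matches the delegate can be stored in a delegate variable and called through it. The class DelegateSketch, the method ShowTools, and the Calls counter are hypothetical names used only for illustration.

```csharp
using System;

public static class DelegateSketch
{
    // Same shape as the tutorial's delegate: no parameters, no return value.
    public delegate void OnObjectFocused();

    // Counts how often the method ran, so the effect is observable.
    public static int Calls;

    // Matches the delegate's signature, so it can be assigned to it.
    static void ShowTools()
    {
        Calls++;
        Console.WriteLine("Tools shown");
    }

    public static void Run()
    {
        OnObjectFocused handler = ShowTools; // store a reference to the method
        handler();                           // call the method through the delegate
    }
}
```

A method with a different signature, such as one taking an int parameter, would be rejected by the compiler when assigned to handler.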

Events go hand in hand with delegates. An event is a message that some action has occurred. While it is a response to a condition occurring, it can also be a trigger, which is generally how events are used. The syntax for an event looks like this:

event delegateName eventHandlerResponse;

With access modifiers added, as ours will appear in a moment, it looks like this:

public static event OnObjectFocused ObjectFocused;

What makes delegates and events so powerful is the ability to decouple code. If an object cares about an event happening, it can subscribe to that event. Once it has a subscription to that event, it simply listens for that event to happen and then responds.

At this point, the original code can change without affecting the subscription, which makes the code more robust and less likely to break as the project evolves. This can enable a style of programming called black box programming.
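The subscribe-and-listen relationship can be sketched in plain C# as follows. Publisher and Listener are hypothetical stand-ins for the classes in this project; the point is only that the listener knows the event's name and nothing about how or when the publisher decides to raise it.

```csharp
public static class Publisher
{
    public delegate void OnObjectFocused();
    public static event OnObjectFocused ObjectFocused;

    // Only the class that declares the event is allowed to raise it.
    public static void Raise()
    {
        // With no subscribers the event is null, so check before calling.
        if (ObjectFocused != null)
        {
            ObjectFocused();
        }
    }
}

public class Listener
{
    // Counts how many times the event reached this listener.
    public int Heard;

    void Respond() { Heard++; }

    public void Subscribe()   { Publisher.ObjectFocused += Respond; }
    public void Unsubscribe() { Publisher.ObjectFocused -= Respond; }
}
```

The body of Raise() could be rewritten entirely and Listener would not need to change, which is the decoupling described above.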

What Is Black Box Programming?

The main idea behind black box programming is that we give a box input and receive output in response; what happens between those two points is unnecessary minutiae. As a long-time live sound engineer and musician, this appeals to me, because this is very much how the technology surrounding that space is designed. These days, a guitarist routes their sound through a collection of various effects boxes, each with a collection of knobs, sliders, and switches, to arrive at the tone they are looking for.

While there are definitely some arguable and potentially long-term downsides to this approach, it is a great way to start seeing a program as an overall system. Most musicians who really care about the sound they produce start off not knowing what the black boxes do, but they learn in order to maximize their sound and possibly create their own versions of those black boxes.

Delegates & Events in Action

Now, we need to update our NRSRManager to accommodate our new choice in approaches.

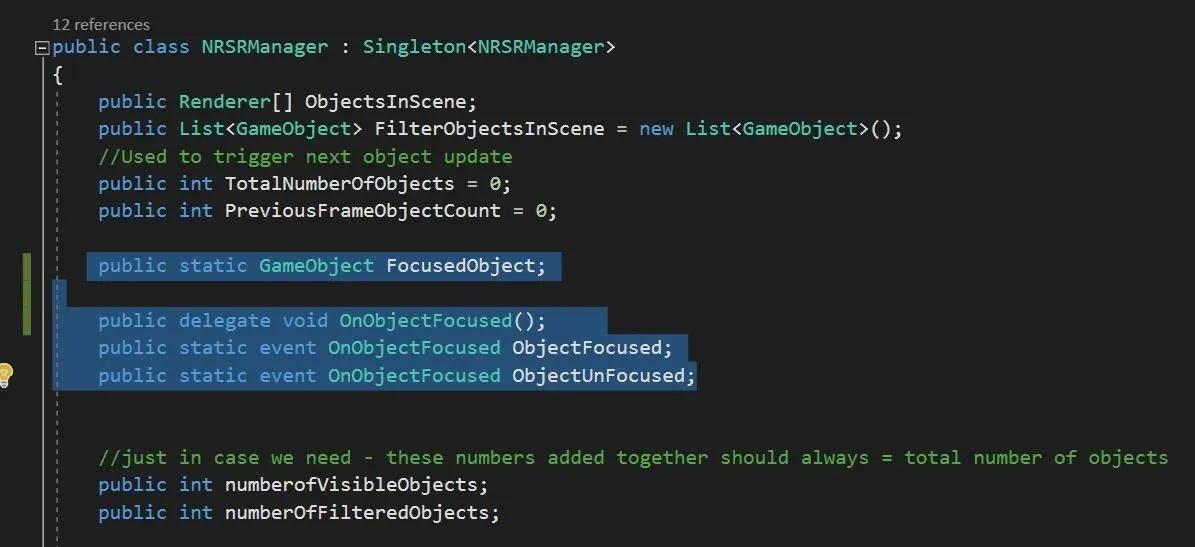

Add the four lines pictured below to the variable declarations of NRSRManager.

Here you can see we are declaring a GameObject, followed by a delegate called OnObjectFocused(). Then we have two static events, called ObjectFocused and ObjectUnFocused. You should notice that they both reference the delegate OnObjectFocused(). The static keyword makes a member belong to the class itself, so it is shared amongst all instances.
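Based on that description, the four new declarations might look something like the sketch below. Making FocusedObject public and static is an assumption, as is the MonoBehaviour base class; the rest of the existing NRSRManager members are left out. The text spells the second event both ObjectUnFocused and ObjectUnfocused, so use whichever spelling your own code declares.

```csharp
using UnityEngine;

public class NRSRManager : MonoBehaviour
{
    // The object currently under the user's gaze, or null when there is none.
    // Declaring it public static is an assumption.
    public static GameObject FocusedObject;

    // The template every subscribing method has to match.
    public delegate void OnObjectFocused();

    // Two static events that share the same delegate type.
    public static event OnObjectFocused ObjectFocused;
    public static event OnObjectFocused ObjectUnFocused;
}
```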

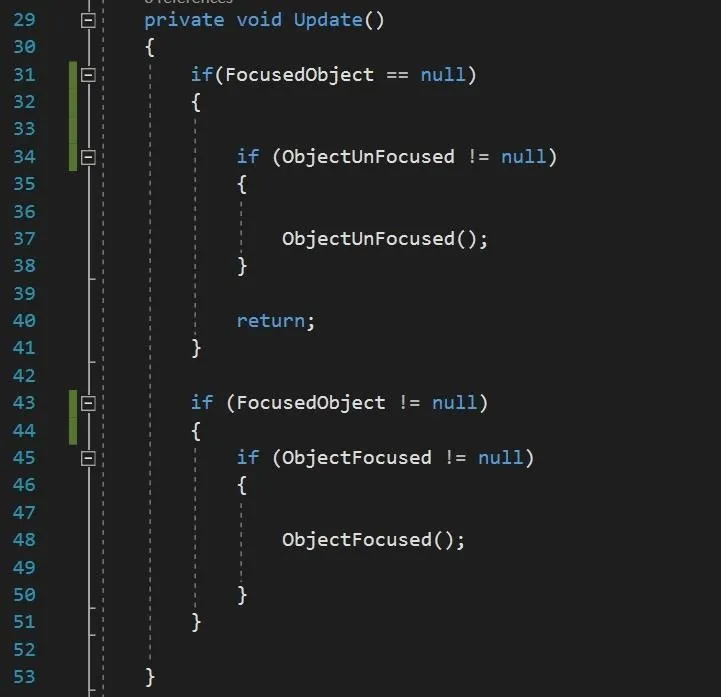

While we already have a FixedUpdate() function from a couple of chapters ago, now we need an Update() as well. FixedUpdate() runs on a fixed timestep, independent of the frame rate, while Update() runs once every frame.

Add the following code to Update().

- We check if the GameObject FocusedObject is null.

- Since NRSRManager is the source of the delegate and event, when we go to call the event, we need to make sure that someone has subscribed to our event. So we check if it is null. If it is we return; otherwise, fire off the event so the subscribers know.
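One way the two checks above could fit together is sketched here. This is a guess at the shape of the method rather than the code from the screenshot: in particular, raising ObjectUnFocused when nothing is focused is an assumption, and the declarations are repeated only so the sketch compiles on its own.

```csharp
using UnityEngine;

public class NRSRManager : MonoBehaviour
{
    public static GameObject FocusedObject;
    public delegate void OnObjectFocused();
    public static event OnObjectFocused ObjectFocused;
    public static event OnObjectFocused ObjectUnFocused;

    void Update()
    {
        if (FocusedObject == null)
        {
            // Nothing is being gazed at. Assumption: tell subscribers so.
            if (ObjectUnFocused != null)
            {
                ObjectUnFocused();
            }
            return;
        }

        // An event with no subscribers is null, so check before raising it.
        if (ObjectFocused == null)
        {
            return;
        }

        ObjectFocused();
    }
}
```

Written this way, the events are raised on every frame, so subscriber methods should be cheap and safe to call repeatedly.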

So now we have our delegate and events set up in our manager. We need to set a few classes up to listen or subscribe to the events.

Activate Tool Set

Here in our ActivateToolSet class, go ahead and finish typing out the Start() and Update() methods.

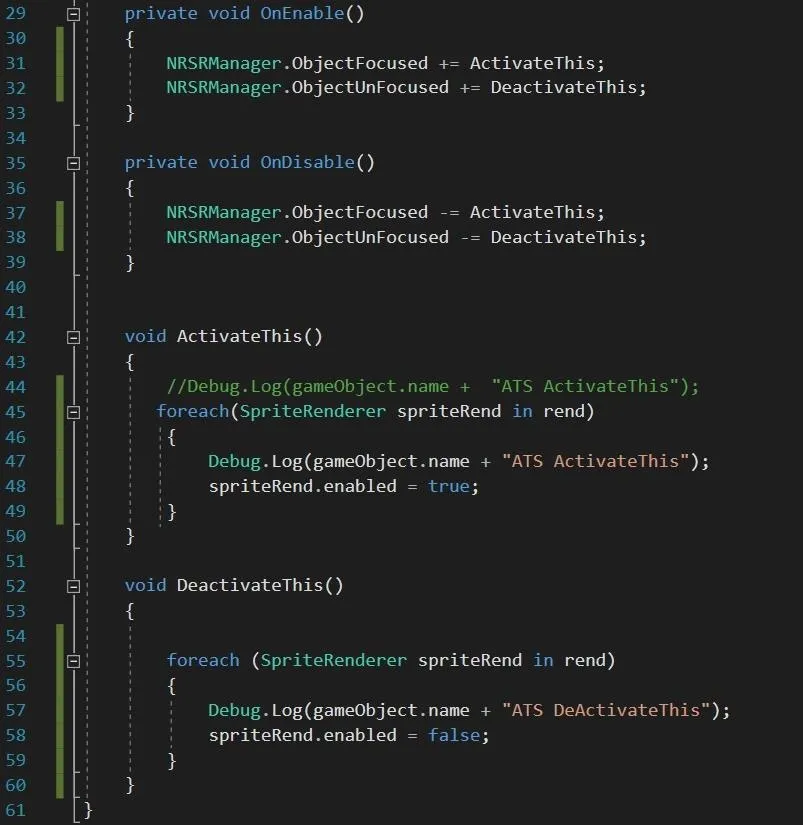

In our OnEnable() method, we need to subscribe to the events that matter to us. We do that by naming the event and then attaching a method of our own to it with the += operator.

What this means is that when the ObjectFocused event happens, ActivateThis will also run. When ObjectUnfocused runs, so will DeactivateThis.

To avoid memory leaks and the like, we also unsubscribe from the events when the component is disabled. Unsubscribing works much the same way as subscribing, but uses the -= operator.

Type out the rest of the class. You will see how ActivateThis is a loop that turns on all of the SpriteRenderers in our List<> and DeactivateThis does the opposite. How very symmetrical of me.
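Put together, the subscription and the two loops might look roughly like this. It is a sketch that assumes the NRSRManager events are declared as described earlier and that the renderer list is named rend; the other fields, along with Start() and Update(), are omitted.

```csharp
using System.Collections.Generic;
using UnityEngine;

public class ActivateToolSet : MonoBehaviour
{
    public List<SpriteRenderer> rend = new List<SpriteRenderer>();

    void OnEnable()
    {
        // Subscribe: run our methods whenever the manager raises its events.
        NRSRManager.ObjectFocused += ActivateThis;
        NRSRManager.ObjectUnFocused += DeactivateThis;
    }

    void OnDisable()
    {
        // Unsubscribe so the manager no longer holds references to this object.
        NRSRManager.ObjectFocused -= ActivateThis;
        NRSRManager.ObjectUnFocused -= DeactivateThis;
    }

    void ActivateThis()
    {
        // Turn on every sprite in the toolset.
        foreach (SpriteRenderer r in rend)
        {
            r.enabled = true;
        }
    }

    void DeactivateThis()
    {
        // Turn every sprite back off.
        foreach (SpriteRenderer r in rend)
        {
            r.enabled = false;
        }
    }
}
```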

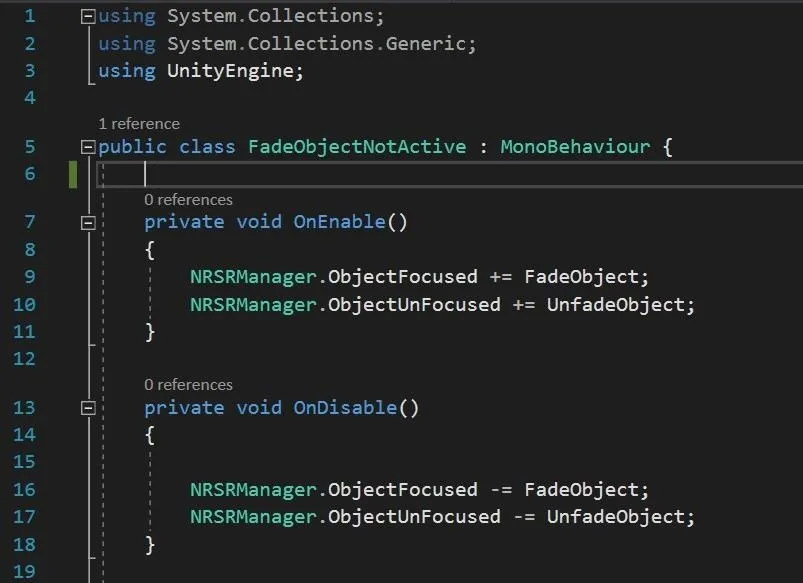

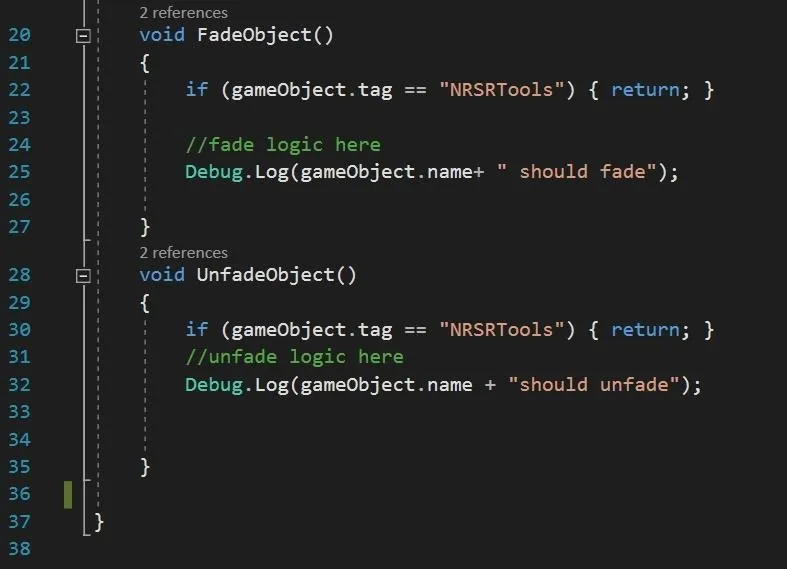

Add FadeObjectNotActive

Now, FadeObjectNotActive is another class that we will be subscribing to ObjectFocused and ObjectUnfocused. We won't be actually implementing the fading code yet, as that is for another lesson. This is another powerful element of delegates and events, though; the ability for multiple objects to subscribe to the same event offers a lot of flexibility in design.

As you can see, this currently does not do much other than post a Debug.Log() message to let us know it is working.
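A placeholder along those lines could be sketched as follows. The method name FadeObject and the NRSRTools check are mentioned in the recap at the end of this lesson; comparing by object name, the UnFadeObject method, and the log messages are assumptions made for illustration.

```csharp
using UnityEngine;

public class FadeObjectNotActive : MonoBehaviour
{
    void OnEnable()
    {
        NRSRManager.ObjectFocused += FadeObject;
        NRSRManager.ObjectUnFocused += UnFadeObject;
    }

    void OnDisable()
    {
        NRSRManager.ObjectFocused -= FadeObject;
        NRSRManager.ObjectUnFocused -= UnFadeObject;
    }

    void FadeObject()
    {
        // Skip the toolset itself. Checking by name is an assumption.
        if (gameObject.name != "NRSRTools")
        {
            Debug.Log(gameObject.name + " received ObjectFocused");
        }
    }

    // Hypothetical counterpart; the real fading logic comes in a later lesson.
    void UnFadeObject()
    {
        Debug.Log(gameObject.name + " received ObjectUnFocused");
    }
}
```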

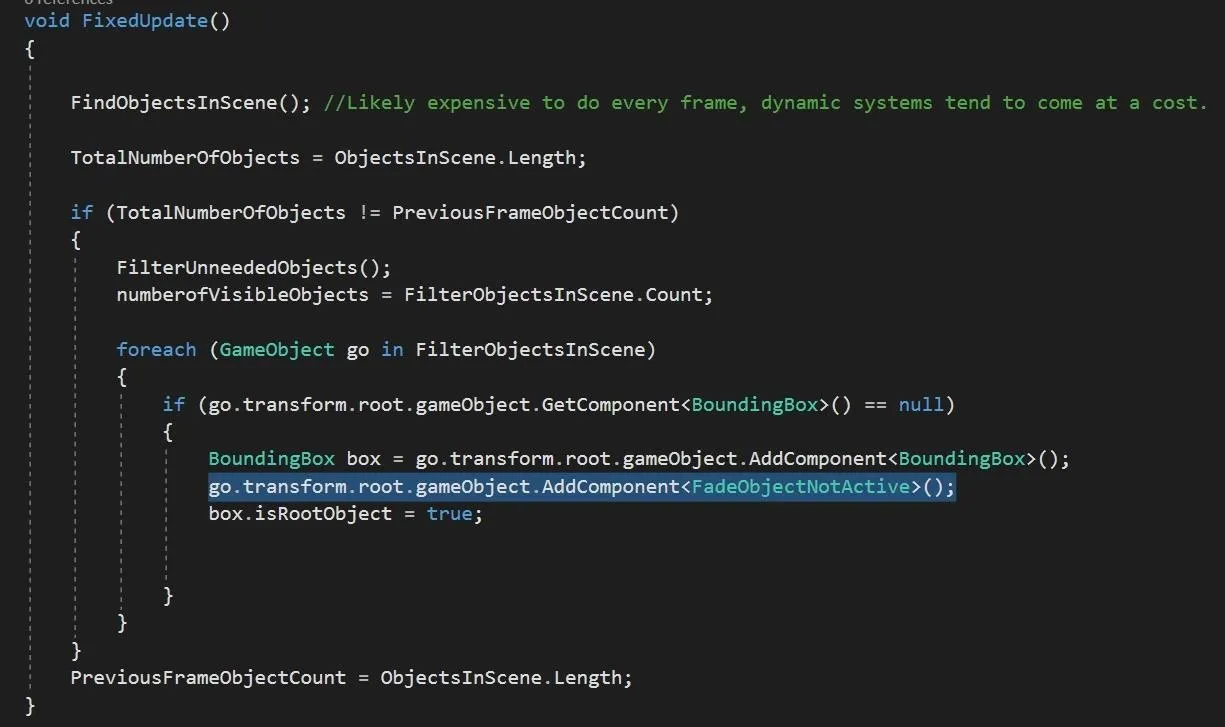

Now that FadeObjectNotActive exists, we need to change the FixedUpdate() in NRSRManager so that it adds the component to the objects that need it.

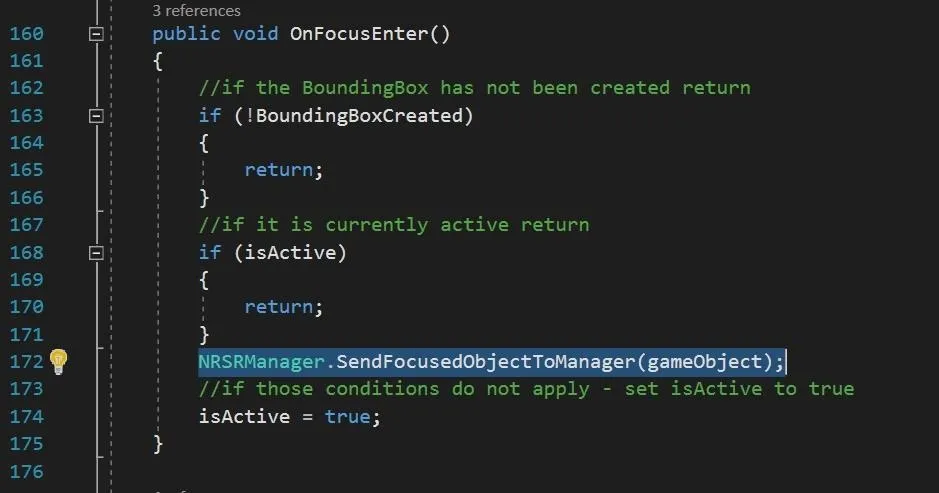

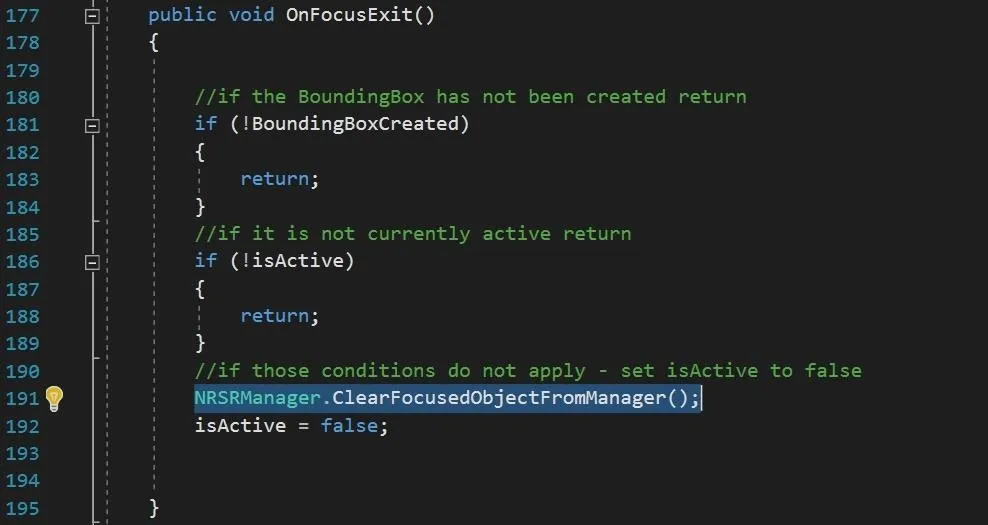

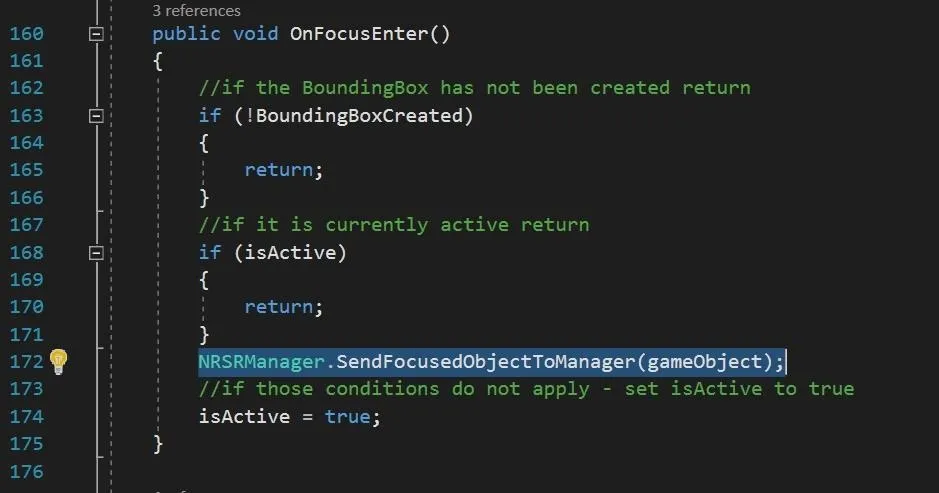

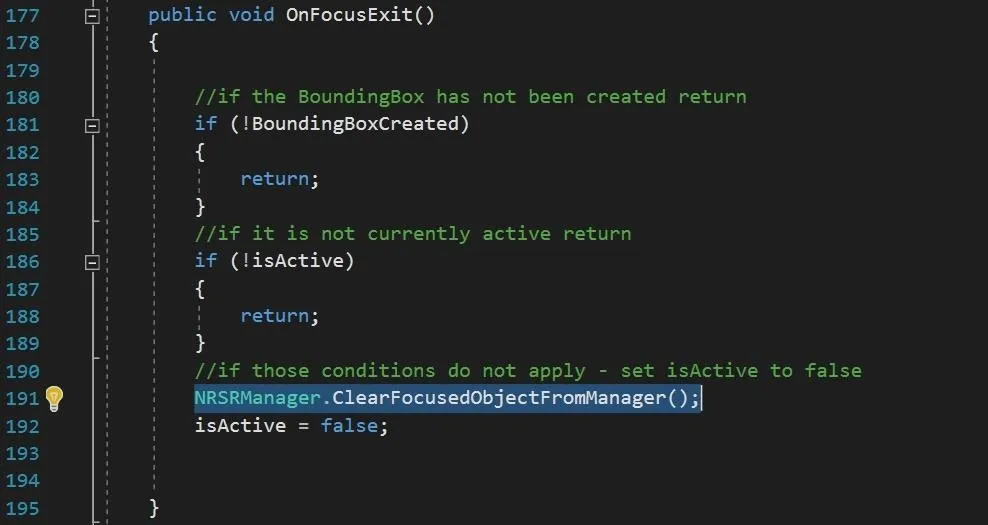

And finally, we need to add two lines to our BoundingBox class, one in OnFocusEnter() and one in OnFocusExit(). These tell the NRSRManager which object is currently focused and clear that information when the gaze is pulled away.
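The two lines might look something like the sketch below. It assumes FocusedObject is a public static field on NRSRManager and shows only the two methods; the rest of BoundingBox, and whatever calls these methods when the gaze enters or leaves, is omitted.

```csharp
using UnityEngine;

public class BoundingBox : MonoBehaviour
{
    public void OnFocusEnter()
    {
        // Tell the manager that this object is now the focused one.
        NRSRManager.FocusedObject = gameObject;
    }

    public void OnFocusExit()
    {
        // The gaze moved away, so clear the reference.
        NRSRManager.FocusedObject = null;
    }
}
```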

Now if you compile and run, you will see that the objects that we gaze at now have our tools appearing with them. And when we gaze away they disappear.

So, in the end, this is how we are using delegates and events for our project.

- When an object is focused on by the user, BoundingBox tells NRSRManager which object is the FocusedObject.

- NRSRManager, now that its FocusedObject is no longer null, checks the ObjectFocused event and, if it is not null, runs it.

- ObjectFocused event fires off and two things happen. One, ActivateToolSet triggers its stored method ActivateThis(), loops through the renderers and enables them so we can see our toolset. Two, at the same time, FadeObjectNotActive fires off its FadeObject() method. Currently, this method checks to make sure the object is not NRSRTools and if it isn't it posts a Debug.Log().

The thing is that we could have as many methods as we need trigger off of that event, which makes this a very powerful system.
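To illustrate that last point in plain C#, here is a small sketch in which three unrelated methods subscribe to a single event. FocusHub, the three method names, and the Log list are hypothetical and exist only to make the result visible.

```csharp
using System.Collections.Generic;

public static class FocusHub
{
    public delegate void OnObjectFocused();
    public static event OnObjectFocused ObjectFocused;

    // Records which subscribers ran, in the order they ran.
    public static readonly List<string> Log = new List<string>();

    static void ShowTools()  { Log.Add("tools"); }
    static void FadeOthers() { Log.Add("fade"); }
    static void PlaySound()  { Log.Add("sound"); }

    public static void Demo()
    {
        // Any number of methods can attach to the same event.
        ObjectFocused += ShowTools;
        ObjectFocused += FadeOthers;
        ObjectFocused += PlaySound;

        // Raising the event once calls every subscriber, in subscription order.
        if (ObjectFocused != null)
        {
            ObjectFocused();
        }
    }
}
```

Adding a fourth reaction later means writing one more method and one more += line; the code that raises the event stays untouched.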

And as usual, for those that do not want to type everything out:

Head-locking in mixed reality is a no-no. And before we can really use the tools to do their job we need to adjust that. In the next lesson, we are going to make it so the tools lag a little behind our gaze.


Cover image via Jason Odom/Next Reality
