Every step in the evolution of computing brings an in-kind leap forward in user input technology. The personal computer had the mouse, touchscreens made smartphones mainstream consumer devices, and AR headsets like the HoloLens and the Magic Leap One have leveraged gesture recognition.

Now, one new startup is looking to revolutionize the whole spectrum of human-machine interfaces (HMI) with not one but five natural input methods working in concert.

On Tuesday, Asteroid launched a crowdfunding campaign for its 3D Human-Machine Interface Starter Kit, which supplies developers with the software and hardware for creating apps controlled by eyes, hand gestures, and even thoughts and emotions.


"Imagine an eye tracker being used in a 3D modeling program. It can tell which part of the 3D model you're focusing on, and may be automatically zoomed in," said Saku Panditharatne, founder and CEO of Asteroid, in a blog post.

"It might use a trained AI to guess from your eye fixation behavior to guess what menu you want to open, and automatically click through the menus to get to the action you want. If the computer gets it wrong, you can manually say so, maybe by pressing a 'cancel' button."

For a pledge of $450, the team says backers will receive the Focus eye tracker, Axon brain-computer interface, Glyph gesture sensor, Continuum linear scrubber, and Orbit hand controller, each powered by a nine-volt battery pack and equipped with a Bluetooth component for connecting to PCs, smartphones, or tablets.

Mounted on a pair of plastic eyeglass frames, Focus consists of a pair of high-resolution, high-speed USB cameras, a Raspberry Pi board, a Bluetooth component, and a battery. The eye tracker, which is available via a standalone pledge of $200, is capable of interpreting user intent and attention based on eye movement.

Focus eye tracker

"With a mouse, you send one click every few seconds. An eye-tracker may be able to infer several bits of information about your attention and intent multiple times per second," said Panditharatne.

Axon consists of six head-worn electrodes connected to an Arduino board. The device processes brain signals, which can be used to interpret user intent as well as emotions.

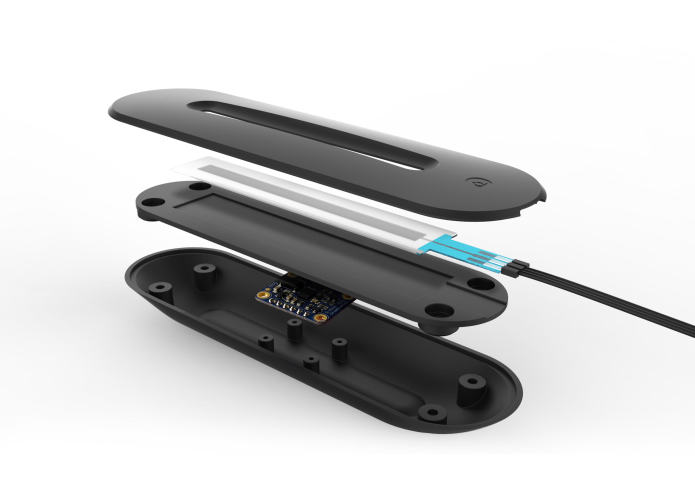

The final three components, Glyph, Continuum, and Orbit, connect to a single Arduino board. Glyph uses an electric field sensor to interpret hand gestures. Continuum employs a sliding resistive controller that enables fine scrubbing with touch input. Finally, Orbit is an acrylic wand that acts as a handheld controller capable of nine degrees of freedom (obviously destined for some Harry Potter-like magical hijinks).

Continuum and Orbit share the same plastic enclosure.

With the accompanying macOS software, developers can create nodes that tie sensory inputs to actions within an application. For example, the movement of the user's eye can control the position of a cursor in three-dimensional space (not just horizontal and vertical coordinates). These nodes are then packed into an interaction file, which can be loaded into a Swift mobile app (which, in turn, would enable developers to create ARKit apps).
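To make the node idea a little more concrete, here is a minimal Python sketch of how a sensor-to-action binding might be packed into a file and played back. It is purely illustrative and not Asteroid's format or API: the `Node` class, the channel name `focus.gaze`, the action name `cursor.move`, the JSON layout, and the helper functions are all assumptions made up for this example.

```python
import json
import math
from dataclasses import dataclass, asdict


@dataclass
class Node:
    """Hypothetical node binding one sensor channel to one app action."""
    sensor: str  # e.g. "focus.gaze" (made-up channel name)
    action: str  # e.g. "cursor.move" (made-up action name)


def pack_interaction(nodes):
    """Serialize nodes to a JSON string standing in for an interaction file."""
    return json.dumps({"nodes": [asdict(n) for n in nodes]})


def load_interaction(text):
    """Rebuild the node list from the JSON string."""
    return [Node(**d) for d in json.loads(text)["nodes"]]


def gaze_to_cursor(origin, direction, depth):
    """Place a 3D cursor `depth` units along the (normalized) gaze ray."""
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0:
        raise ValueError("gaze direction must be non-zero")
    return tuple(o + depth * c / length for o, c in zip(origin, direction))


def dispatch(nodes, sensor, value, handlers):
    """Send a sensor reading to every action bound to that sensor."""
    for node in nodes:
        if node.sensor == sensor:
            handlers[node.action](value)


# Round-trip one node through the stand-in file, then feed it a gaze sample.
nodes = load_interaction(pack_interaction([Node("focus.gaze", "cursor.move")]))
cursor = {}
handlers = {"cursor.move": lambda pos: cursor.update(position=pos)}
sample = gaze_to_cursor((0.0, 0.0, 0.0), (0.0, 0.0, 2.0), 1.5)
dispatch(nodes, "focus.gaze", sample, handlers)
print(cursor["position"])  # (0.0, 0.0, 1.5)
```

The point of the sketch is the separation of concerns: the bindings live in data, so the same app code could in principle be rewired to a different sensor without being rewritten.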

"The process of putting your thoughts into a computer may be ten times or even a hundred times faster than with just a mouse. If it took 10 hours to create a 3D model before, a 100x reduction is six minutes," said Panditharatne.

While the hardware and software are designed for mobile devices, Panditharatne has an eye towards augmented reality and virtual reality headsets, where three-dimensional spaces open up a wider realm of possibilities, such as 3D design.

"The 'display' part of a human-machine interface shows the user a working copy of what they're making," said Panditharatne. "AR/VR headsets allow for much richer, wide field-of-view rendered displays compared to smartphones or laptops. In the 3D modeling example, a headset display would allow for viewing the working model in much more detail. AR/VR can also be combined with haptics to create a display that looks and feels like it's made up of real physical objects, which makes interaction feel all the more natural."

Designing a 3D car model like this one can be done much faster via Asteroid's HMI kit.

AR evangelists and leaders, like Magic Leap CEO Rony Abovitz and Microsoft technical fellow Alex Kipman (or any other of the influential minds among the NR30), often speak in utopian terms about augmented reality as the next major revolution in computing. If they are right, the new paradigm of "spatial computing" will demand new methods of user input, which is where companies like Asteroid fit in.

"What's interesting about emerging human-machine interface tech is the hope that the user may be able to 'upload' as much as they can 'download' today," said Panditharatne. "The most promising application is in augmented creativity — where the user works with a computer to design something new."

Of course, Asteroid is not alone in its pursuit of the next evolution of user input in the context of augmented reality. Tobii is working to integrate its eye-tracking technology into smartglasses. Neurable has developed an SDK for brain-computer interfaces, while CTRL-Labs has attracted investment from Google and Amazon for its nerve-tapping technology. And Leap Motion has helped a generation of AR developers and tinkerers leverage gesture recognition.

However, while Magic Leap has notably included handheld controllers, gesture recognition, and eye tracking in the Magic Leap One, Asteroid's HMI kit may be the most ambitious attempt yet to move human-to-computer interaction forward for the augmented reality era.


Cover image via Asteroid
