Niantic first unveiled its AR cloud back in 2018, using it to enhance the immersive capabilities of its gaming portfolio.

Now, the company is expanding access to the system, allowing third-party developers to take advantage of next-generation augmented reality features for their mobile apps.

The platform, which was previously known as the "Niantic Real World Platform," is also getting a new name: Lightship. Niantic opened up the refreshed developer kit as a private beta this week. At launch, Lightship will enable developers to leverage real-time mapping, semantic segmentation, and multiplayer support.

"Niantic is building the 3D map of the world hand-in-hand with its player base to power new kinds of immersive AR experiences," said Kei Kawai, vice president of product management for Niantic, in a statement. "With the Niantic Lightship Platform and ARDK, we want to provide the richest selection of development tools and technologies, so any developer can create their own unique experiences."

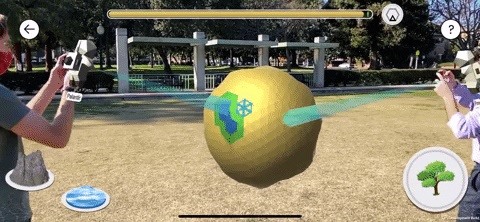

With real-time mapping, Lightship uses neural networks to build a mesh of the surroundings from a standard smartphone camera, though accuracy is better on iPhones with LiDAR sensors. Semantic segmentation lets apps recognize elements of the real-world environment, such as ground, sky, and buildings. With this data, developers can add occlusion to AR experiences, so that virtual content appears to move realistically in front of and behind physical objects. Finally, the multiplayer feature lets apps network between users and create a shared environment where up to eight players can interact with the same virtual content.
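To make the idea of occlusion concrete, here is a minimal sketch of a per-pixel depth test, the general technique behind hiding virtual content that sits behind a real surface. It is not Lightship's API or Niantic's implementation; the function name `composite_with_occlusion` and the toy one-row "images" are hypothetical, chosen only for illustration.

```python
def composite_with_occlusion(camera, virtual, real_depth, virtual_depth):
    """Show a virtual pixel only where it is closer to the viewer than the real surface.

    All four arguments are 2D lists of the same shape (hypothetical toy data).
    A virtual depth of None means no virtual content covers that pixel.
    """
    out = []
    for cam_row, virt_row, rd_row, vd_row in zip(camera, virtual, real_depth, virtual_depth):
        out_row = []
        for cam_px, virt_px, rd, vd in zip(cam_row, virt_row, rd_row, vd_row):
            # Depth test: the virtual pixel wins only if it is strictly nearer.
            visible = vd is not None and vd < rd
            out_row.append(virt_px if visible else cam_px)
        out.append(out_row)
    return out


# One row of three pixels: a near wall, a far wall, and open sky.
camera = [["wall", "wall", "sky"]]
virtual = [["character", "character", "character"]]
real_depth = [[1.0, 3.0, float("inf")]]   # meters from the camera
virtual_depth = [[2.0, 2.0, None]]        # character stands 2 m away; absent over the sky

result = composite_with_occlusion(camera, virtual, real_depth, virtual_depth)
print(result)
```

In this toy scene the character is hidden behind the near wall, drawn in front of the far wall, and absent where no virtual content exists. A real pipeline would take the real-world depth from the reconstructed mesh or a depth estimate rather than hand-written numbers.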

One of the features still missing from Lightship is persistent content, as Niantic is still building its Visual Positioning System. Last year, the company took its first steps toward creating its crowdsourced 3D map for persistent content by introducing AR Mapping tasks in Pokémon GO. The feature drew some backlash from the gaming community, however, for offering less-than-desirable rewards for the extra effort the scanning tasks require.

Niantic has already begun integrating the platform into Pokémon GO, Ingress, and Harry Potter: Wizards Unite, as well as the forthcoming Pikmin adaptation for Nintendo. The platform powers features like Adventure Sync for background step tracking, as well as Shared AR mode and Reality Blending for AR experiences in Pokémon GO.

Last year, the company began accepting applications from developers for limited access to the Real World Platform via its Creator Program. Now, interested developers can request access to the private beta through the Niantic developer portal.

In the three years since Niantic unveiled its AR cloud plans, the segment has gained considerable competition. For example, Snap recently acquired a 3D mapping startup to jump-start its own AR cloud platform, and Epic Games has raised funding to develop its own vision of the Metaverse. Additionally, Facebook has teased its own AR cloud vision, while Ubiquity6 and Samsung have launched their own apps with AR cloud features. Apple and Google have also added similar AR cloud features to their respective ARKit and ARCore toolkits, including multiplayer support, persistent content, and depth mapping for occlusion and precision anchoring.

Despite the crowded field, Niantic does have certain advantages, most notably a user base to help build its 3D map and vast experience creating geolocated experiences. While AR gaming has seemed to start and stop with Pokémon GO, a platform that pushes the boundaries of what's possible for mobile AR could enable more robust experiences ahead of the advent of smartglasses.
