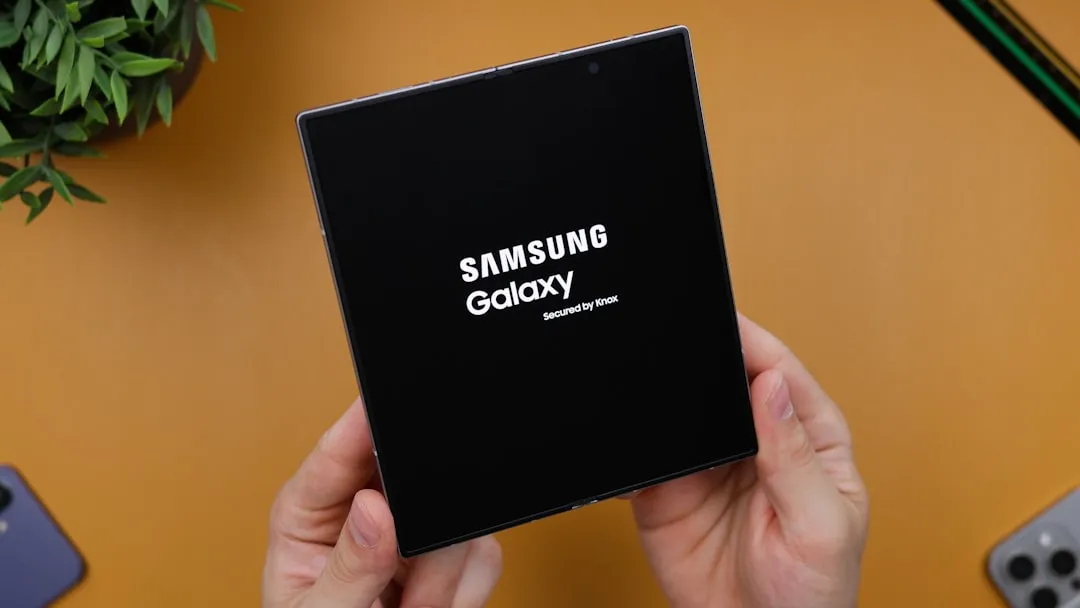

Apple announced their new iPhones today, and the 7 Plus features two camera lenses on its backside. That could push smartphone photography ahead in a major way. It may also serve as the basis for their foray into virtual, augmented, and mixed reality.

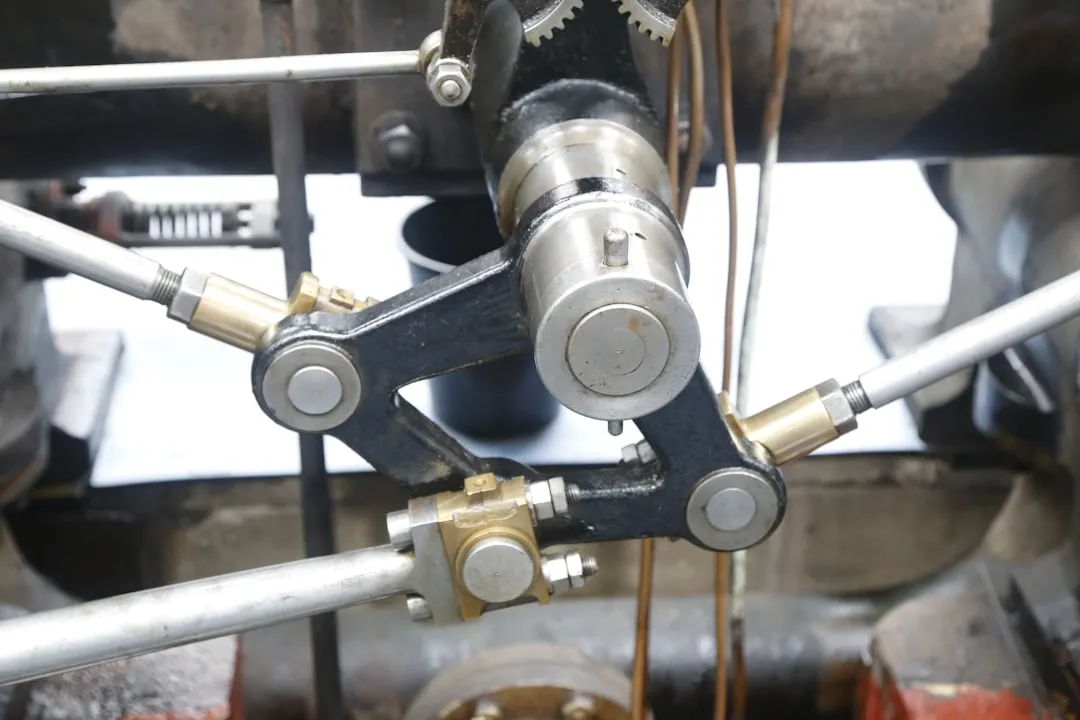

HTC Vive Lighthouses

A lot of things make the Microsoft HoloLens and HTC Vive impressive technologies, but motion tracking and spatial mapping are right up at the top of the list.

The HoloLens uses a series of cameras and a depth sensor on the front of the headset to see what you see and map out your three-dimensional space. The Vive relies on two base stations called lighthouses, which people often mistake for cameras. In fact, the lighthouses only emit infrared light, and sensors on the Vive headset pick it up to work out the headset's position. Both systems gather data from multiple sources, combining imaging and infrared technologies to function.

The iPhone 7 Plus' dual-lens camera.

So how does Apple's hardware stack up?

As you might expect, given Apple's history of removing any part they deem non-essential, no iPhone camera does anything with infrared light other than filter it out of your photos. That said, a stereoscopic camera configuration like the one on the 7 Plus is enough to estimate depth. Furthermore, Apple would only need to build additional sensors into a headset design to give the phone what it needs for more robust spatial mapping.
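To make the depth claim concrete, here is a minimal sketch of the textbook stereo relation, depth = focal length × baseline / disparity. It assumes two identical, already-aligned cameras, which is a simplification, and the function name and every number in it are hypothetical rather than iPhone 7 Plus specifications or anything Apple has described.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Estimate the distance in meters to a point seen by both cameras.

    focal_length_px: focal length expressed in pixels (assumed value).
    baseline_m:      distance between the two lenses in meters (assumed value).
    disparity_px:    horizontal shift of the point between the two images.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values chosen only to show the trend.
    focal_length_px = 1000.0
    baseline_m = 0.01
    for disparity_px in (20.0, 10.0, 5.0):
        depth_m = depth_from_disparity(focal_length_px, baseline_m, disparity_px)
        print(f"disparity {disparity_px:>4} px -> depth {depth_m:.2f} m")
```

The smaller the shift between the two images, the farther away the object is. With lenses only a short distance apart, distant objects produce very small shifts, which is one reason extra sensors help with mapping a whole room.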

Of course, this is pure speculation. If you can count on one thing, it's that Apple will do whatever Apple wants to do. Just because the signs are there doesn't mean it'll happen. But given their stated interest in augmented reality, and given that the iPhone 7 Plus' hardware is well suited to AR- and MR-related tasks, there's a clear path to their first product in this space, someday.

Cover image via Apple
